Whether environmental and natural sciences, physics, medicine, engineering, or archaeology and art: all of them need a great deal of computing power for simulations, calculations, and visualizations, and therefore powerful computers. Dive into the world of science and research with our reports. Discover new galaxies, the inner workings of the human body, the consequences of climate change, historic halls and buildings, fascinating works of art, and more. Or read about the latest achievements in IT.

The new CoolMUC system will soon have successfully completed 100 working days: the first practical experiences with the…

How can Europe become more independent in IT and computing? These and other questions are driving the European HPC…

Professor Luciano Rezzolla receives the HPC Excellence Award.

The Leibniz-Rechenzentrum is inviting three renowned experts from the IT industry to join its new expert council in order to…

BayernKI, HammerHAI, Blue Lion: many technical resources were announced in 2024. In 2025, these plans at the LRZ and…

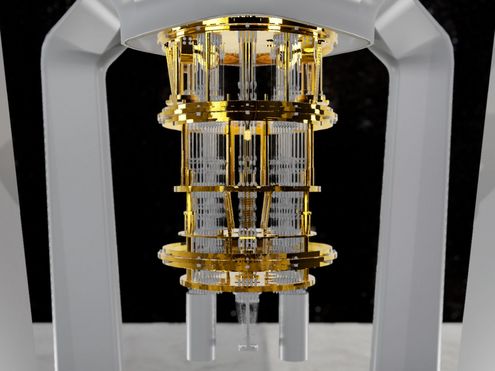

The television program 3Sat Nano visited the LRZ to have it explained how quantum computers work.

With BayernKI, LRZ and NHR@FAU offer fast and flexibly scalable access to high-performance infrastructure.

LRZ Director Dieter Kranzlmüller discussed the opportunities and potential of Munich's quantum scene at DLD25.

The new supercomputer Blue Lion is part of the national high-performance computing infrastructure of the Gauss Centre for…