Artificial Intelligence has established itself in high-performance computing and provides the sciences with a plethora of new methods for analysing data. That is why we provide the LRZ’s AI systems, host partners’ AI platforms and evaluate innovative AI chips for potential use in research.

From the natural sciences to the humanities: Artificial Intelligence (AI) is now in constant use across disciplines. Its methods can be used to analyse and evaluate Big Data comprehensively. AI also complements the various methods in high-performance computing and extends conventional simulations with statistical analyses where mathematical modelling and conventional programming reach their limits. With its LRZ AI systems, the LRZ provides researchers with powerful clusters equipped with Graphics Processing Units (GPUs).

Strong partnerships create even more opportunities: BayernKI, the Bavarian IT infrastructure for AI, and the European AI factory HammerHAI provide additional AI resources and supplement the range of training courses and workshops. Last but by no means least, partnerships with the Munich Centre for Machine Learning (MCML) and the German Aerospace Centre (DLR) are expanding the LRZ's range of AI offerings as well as its experience with AI.

The new technology is developing dynamically, but the energy requirements of AI are very high, and AI models are often unreliable. This raises research questions for which the LRZ is seeking answers in collaboration with renowned international institutes.

Analysing measured values, developing models, calculating simulations: If research can process its observations efficiently, we will be able to increase our knowledge more quickly and better solve the challenges of the present. That is why we do more than just provide scientists and researchers with high-performance technology and innovative tools that shorten the time it takes to gain knowledge from research. Above all, we focus on personal support and a comprehensive, up-to-date training programme: At the LRZ, you can learn programming languages as well as how to use high-performance and AI systems. And it goes without saying that LRZ specialists are continuously optimising the available computing power, the infrastructure for data management and the user-friendliness of technology and tools.

The LRZ’s AI systems are made up of various high-performance cluster segments with Graphics Processing Units (GPUs) from vendors such as NVIDIA. The LRZ provides a practical software stack for developing your own models as well as tried and tested data sets and common AI applications.

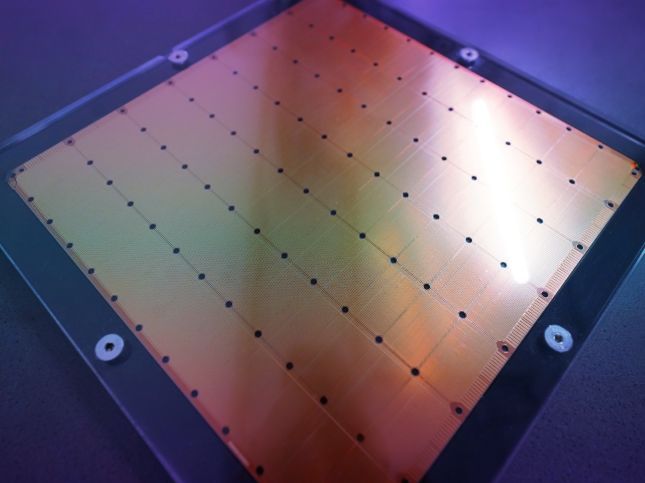

Cerebras Systems has developed a chip specially designed for training and working with large language models: the Wafer Scale Engine 2 (WSE-2). It is the size of a pizza box and contains 2.6 trillion transistors, 850,000 computing cores and around 40 gigabytes of fast on-chip static memory (SRAM). When working with Large Language Models (LLMs), data can flow through the chip while intermediate results are held on it and recalculated there. Together with researchers, the LRZ is evaluating a CS-2 system with the WSE-2 for its potential use in science.

Not only does AI need powerful computers, it also needs flexible storage solutions. Regardless of whether we’re talking about computer vision or language models, anyone who works with AI needs to prepare AI systems for their tasks. Training runs iteratively or in batch mode, alternating between calculation or analysis and the storage of intermediate results. For these work steps, the LRZ’s AI systems offer, among other things, an interactive web server and can be connected to the Data Storage System (DSS) and LRZ Cloud Storage. This creates a flexible, scalable environment equipped to process a wide range of Big Data tasks.
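The alternation between calculation and storing intermediate results can be illustrated with a minimal sketch. It is not an LRZ tool or API: the function names, the JSON checkpoint format and the directory layout are assumptions made for illustration, and the checkpoint directory merely stands in for a storage location such as a mounted DSS container. The sketch fits a single parameter by gradient descent, writes a checkpoint at fixed intervals and resumes from the most recent one when restarted.

```python
import json
import tempfile
from pathlib import Path


def latest_checkpoint(ckpt_dir: Path):
    """Return the saved state with the highest step, or None if none exist."""
    # Zero-padded step numbers make lexicographic order equal to step order.
    files = sorted(ckpt_dir.glob("step_*.json"))
    return json.loads(files[-1].read_text()) if files else None


def train(ckpt_dir: Path, total_steps: int, save_every: int = 10, lr: float = 0.1):
    """Fit w in y = w * x by gradient descent, checkpointing periodically."""
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy data, true w = 2
    # Resume from the latest checkpoint if one exists (hypothetical format).
    state = latest_checkpoint(ckpt_dir) or {"step": 0, "w": 0.0}
    while state["step"] < total_steps:
        # Compute phase: one gradient step on the mean squared error.
        grad = sum(2 * (state["w"] * x - y) * x for x, y in data) / len(data)
        state["w"] -= lr * grad
        state["step"] += 1
        # Storage phase: write intermediate results at fixed intervals.
        if state["step"] % save_every == 0:
            path = ckpt_dir / f"step_{state['step']:06d}.json"
            path.write_text(json.dumps(state))
    return state


if __name__ == "__main__":
    # A temporary directory stands in for a real storage mount.
    ckpt_dir = Path(tempfile.mkdtemp())
    print(train(ckpt_dir, total_steps=20))
    # A second call picks up at step 20 instead of starting over.
    print(train(ckpt_dir, total_steps=30))
```

Real training jobs would typically use the checkpointing facilities of their AI framework; the point here is only the pattern of computing, saving and resuming.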

Researching and developing AI methods and models in Bavaria: As part of its high-tech agenda, the Free State of Bavaria first established around 130 AI professorships and then set up BayernKI. This high-performance infrastructure is housed at both the LRZ and the Erlangen National High-Performance Computing Centre (NHR@FAU), and the two sites are connected by a fast data line. Here, researchers of all disciplines get fast and flexibly scalable access to AI technology.

The EuroHPC Joint Undertaking is funding an AI factory in Germany in which the LRZ is a partner: HammerHAI is expected to meet the demand for computing resources for AI methods in research, industry and the public sector. To this end, the High-Performance Computing Centre (HLRS) in Stuttgart is working with the LRZ and other research institutes to create AI-optimised supercomputing infrastructure and provide support. The LRZ advises users and runs training courses on how to use AI.

The LRZ is closely linked to the Munich Centre for Machine Learning (MCML), not just through its Board of Directors but also by hosting AI technology for the MCML. This technology is structured in a similar way to the LRZ’s AI systems and can be connected to them quickly should more computing power be needed for AI applications.

AI applications should be trustworthy, as versatile as possible and useful for research. However, we’re still a long way from achieving this goal. The Trillion Parameter Consortium (TPC) was founded by Argonne National Laboratory in the US and brings together around 60 research institutes from around the world to develop reliable generative AI models for science.

With BayernKI, LRZ and NHR@FAU offer fast and flexibly scalable access to high-performance infrastructure.

New supercomputer “Blue Lion” is part of the German national HPC infrastructure of the Gauss Center for Supercomputing…

With the CS-2 system from Cerebras, AI models for the recognition of hate speech on social media platforms can be…

We research the latest computer and storage technologies as well as Internet tools. In collaboration with partners, we develop technologies for future computing, energy-efficient computing and IT security, as well as tools for data analysis and the development of artificial intelligence systems. Here is an overview of all our research projects.

HammerHAI (Hybrid and Advanced Machine Learning Platform for Manufacturing, Engineering, And Research @ HLRS) will…

DigiMed Bayern is a flagship project that aims to guide Bavaria into the medicine of the future.

At the LRZ, a research information system (RIS) is being developed on the basis of the 'Bay.FIS' application (HSWT).

The LRZ doesn’t just support researchers with AI technology and applications, we also advise and support you in collecting and processing data: Get in touch with the LRZ Big Data and AI team if you would like to know how to prepare, harmonise and ultimately process even the largest data sets on LRZ systems yourself. You can also rest assured that we will provide you with the resources that best suit your AI projects.